What Large Language Models Seem to Do — and What They Truly Don’t

We are living in a moment of extraordinary enchantment, not unlike that of Plato's prisoners, who watched shadows dancing on the cave wall and mistook them for truth.

Today’s shadows are cast by statistical models. They complete our sentences, answer our questions, and, we are told, exhibit emergent intelligence: at some mysterious tipping point, these vast digital minds begin to reason, to infer, to… awaken.

Let us not be so easily seduced.

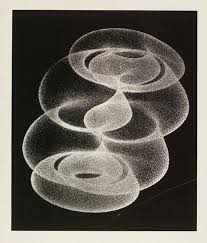

The language of emergence has been borrowed from systems science — where simple rules, applied recursively, give rise to unpredictable, often beautiful, complexity. Flocks of birds, snowflakes, ant colonies. Or in loftier terms: life from non-life, mind from matter.

But when we speak of “emergent abilities” in LLMs, we risk mistaking a quantitative threshold for a qualitative transformation. We confuse scale with soul.

It is not that the model suddenly understands logic puzzles; it has merely seen enough approximations of them to interpolate plausible completions. Its fit improves, not because it gains insight, but because the examples it has absorbed cover the problem space more densely. It is not emergent behavior. It is emergent familiarity.

To borrow an older truth: not all motion is progress. A ship circling in fog may still impress us with its speed.

Indeed, what we call emergence in these models is often little more than a measurement artifact: a discontinuity in the metric, not in the mind. An all-or-nothing score such as exact match can leap from near zero to respectable while the model's underlying accuracy improves only gradually. The machine has crossed a benchmark threshold, not a metaphysical one. Its parameters have grown. Its predictions have sharpened. But ask it why it reasons as it does, and it responds only with more reasoning: not with awareness, not with origin, not with intent.
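The phrase "a discontinuity in the metric" can be made concrete with a toy calculation. The sketch below is purely hypothetical and models no real system: it assumes per-token accuracy rises smoothly with scale, that each token is right or wrong independently, and that an answer counts only if all 30 of its tokens are correct. Under those assumptions the exact-match score, p raised to the 30th power, lingers near zero and then climbs steeply, even though the underlying curve never changes in kind.

```python
# Toy illustration (hypothetical numbers, no real model): a smooth underlying
# improvement can look like a sudden "emergent" jump under an all-or-nothing metric.


def exact_match_rate(per_token_accuracy: float, answer_length: int) -> float:
    """Chance that all answer_length tokens are correct, assuming
    (hypothetically) that each token is right independently of the others."""
    return per_token_accuracy ** answer_length


ANSWER_LENGTH = 30  # assumed answer length in tokens

# Per-token accuracy rising gradually, standing in for increasing model scale.
for p in [0.80, 0.85, 0.90, 0.95, 0.98, 0.99]:
    score = exact_match_rate(p, ANSWER_LENGTH)
    print(f"per-token {p:.2f}  ->  exact match {score:.3f}")
```

Scoring the same outputs with partial credit, such as the per-token accuracy itself, makes the apparent jump disappear: the leap lives in how we score, not in what the model became.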

Let us be precise: this is not intelligence. It is imitation at scale.

And the danger is not in the machine’s illusions, but in our own — in our willingness to accept resemblance as reality. For once we mistake prediction for wisdom, we may begin to believe that we too need not think — that our questions can be answered before they’re even fully formed.